Recently updated on June 3, 2025

With an increasing reliance on AI in the fintech industry and other sectors comes a significant challenge — bias. But what is bias in AI?

From errors in identifying individuals with darker skin tones to hiring algorithms that favor men over women, bias in artificial intelligence isn’t just a theoretical issue; it’s already playing a role in perpetuating stereotypes.

AI can develop bias mainly through real-world training data that reflects social inequalities and discrimination or unbalanced data that favors privileged groups.

This article explores how AI inherits prejudices, the risks it poses, and what can be done to ensure fairness in artificial intelligence.

What is AI Bias?

AI bias refers to systematic errors in artificial intelligence systems that result in unfair or discriminatory outcomes. These errors can appear at any stage of AI development, stemming from biased training data, flawed assumptions built into algorithms, or unintended consequences of model deployment.

Here are some examples of AI bias in real-world systems.

Researcher Joy Buolamwini’s work revealed that commercial facial analysis systems had higher error rates for individuals with darker skin tones, particularly women. These systems misclassified the gender of darker-skinned women up to 34.7% of the time, while the error rate for lighter-skinned men was at most 0.8%.
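A gap like this only shows up when errors are broken out by subgroup, because a single overall error rate averages it away. Here is a minimal sketch of that breakdown. The records are made-up toy data (not figures from the study), and `error_rate_by_group` is a hypothetical helper name.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: share of wrong predictions} for (group, y_true, y_pred) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical toy data: (group, true label, predicted label)
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

rates = error_rate_by_group(records)
overall = sum(y_true != y_pred for _, y_true, y_pred in records) / len(records)

print(overall)  # 0.25 overall looks moderate...
print(rates)    # ...but group_a is at 0.0 while group_b is at 0.5
```

In this toy example the overall error rate of 25% hides the fact that every mistake falls on one group.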

In 2017, Amazon discontinued an AI recruiting tool after discovering it wasn’t rating candidates in a gender-neutral way. The algorithm was trained on resumes submitted over a ten-year period, during which resumes mostly came from men. The system learned to prefer resumes that resembled past successful candidates.

A 2024 UNESCO and IRCAI study found that AI language models, including GPT-2, ChatGPT, and Llama 2, still exhibited gender biases despite mitigation efforts. One model frequently linked female names to domestic roles and male names to professional success, highlighting persistent biases in AI.

Types of AI Bias

AI biases generally fall into three categories based on where they appear in the development process: data, algorithmic, and deployment biases. Let’s examine each:

Data Biases

Data bias happens when the dataset used to train an AI model is incomplete, unrepresentative, or skewed. It can arise from various sources:

Selection Bias: when the data used to train an AI system does not represent the entire population. For example, an AI system trained on data from one geographic region might not perform well for people outside that region.

Labeling Bias: when people apply their own judgement when categorizing data. For example, one annotator might consider aggressive political speech unacceptable in a dataset used for hate speech detection, while another might view it as a fair debate.

Measurement Bias: when incorrect or misleading metrics are used to evaluate an AI model’s performance. If an AI model for hiring is optimized solely for “cultural fit” without considering diversity, it may unintentionally favor people from similar backgrounds, reinforcing workplace homogeneity.

Reporting Bias: when the data sources that AI learns from don’t reflect actual conditions. This often leads to an overrepresentation of rare events in the data, like extreme opinions and unusual circumstances.

Confirmation Bias: when AI systems prioritize information that aligns with pre-existing beliefs. For instance, a social media recommendation algorithm may continually suggest content similar to what the user has already engaged with, reinforcing existing views.
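Some of these data biases can be measured before any model is trained. For labeling bias, one common check is inter-annotator agreement. The sketch below computes Cohen's kappa, which compares how often two annotators agree with how often they would agree by chance. The labels are invented for illustration, and `cohen_kappa` is a hypothetical helper, not part of any specific library.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: based on how often each annotator uses each label
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical annotations of eight posts for a hate speech dataset
annotator_1 = ["unacceptable", "ok", "unacceptable", "ok", "unacceptable", "ok", "ok", "ok"]
annotator_2 = ["unacceptable", "ok", "ok", "ok", "unacceptable", "ok", "unacceptable", "ok"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 3))  # about 0.467
```

The two annotators agree on 75% of the posts, but kappa is well below that because much of the agreement could have happened by chance. A low value suggests the labeling guidelines leave too much room for personal judgment.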

Algorithmic Biases

These biases come from an algorithm’s design, development, or functioning. Common sources include assumptions made during the design process, poorly chosen features, and the way certain data types are prioritized:

Learning Bias: when the choice of model, its assumptions, or the optimization techniques used during training amplify disparities. For example, overly simplistic rules that don’t capture nuances in the data may lead to biases in more complex real-world scenarios.

Implicit Bias: when AI inherits unconscious biases from human developers, whether through data selection or flawed design choices. Even when developers do not intend to introduce bias, their own perspectives and assumptions can shape AI outcomes.

Deployment Biases

Deployment biases arise when an AI model is applied in real-world settings after it has been developed and trained. Two common forms are:

Automation Bias: when humans trust AI-generated recommendations even when they are incorrect. This can be dangerous in high-stakes applications such as healthcare or criminal justice, where blindly accepting the AI system’s output may lead to harmful outcomes.

Feedback Bias: when a model’s outcomes and predictions are distorted due to a feedback loop. This occurs when the model’s outputs impact the inputs it later receives, creating a cycle that reflects and even magnifies the initial prejudice in the system.
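To see how a feedback loop can magnify a small initial skew, consider a deliberately simplified simulation. Two areas have the same true incident rate, but one starts with slightly more recorded incidents. The "model" (an assumption made for this sketch, not a description of any real system) always directs attention to the area with the most records, and new records can only be created where attention goes.

```python
def simulate_feedback_loop(initial_counts, true_rate, rounds, checks_per_round=10):
    """Return the share of records held by area 0 after each round."""
    counts = list(initial_counts)
    shares = []
    for _ in range(rounds):
        # Winner-take-all rule: attention goes to the area with the most records so far
        target = counts.index(max(counts))
        # Both areas share the same true rate, but only the observed area gains records
        counts[target] += true_rate * checks_per_round
        shares.append(counts[0] / sum(counts))
    return shares

# Area 0 starts with 11 recorded incidents, area 1 with 10; the true rates are identical
shares = simulate_feedback_loop([11, 10], true_rate=0.5, rounds=5)
print([round(share, 3) for share in shares])
```

Area 0 starts with about 52% of the records and its share grows every round, even though nothing about the underlying reality differs between the two areas. Real systems are noisier, but the mechanism is the same: the model's output shapes the data it later learns from.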

Why AI Becomes Biased

Although most examples of AI bias trace back to data-related root causes, AI practitioners must learn to recognize the potential for bias in every aspect of AI development.

Data-Related Bias

- Incomplete, imbalanced, or historically biased data

- Poor data collection

- Underrepresentation of certain groups

- Historical prejudices in datasets

Algorithmic Bias

- Certain patterns in data favored over others by an algorithm

- Flawed weighting of features

- Incorrect assumptions in model design

- Lack of fairness constraints

Human Bias in AI Development

- Biases from developers, researchers, or organizations

- Decisions about data selection, labeling, and model objectives

- Unintentional favoritism or exclusion

Feedback Loop Bias

- AI systems that continuously learn from user interactions

- Biased results influence user behavior, and AI learns from those behaviors

- Self-perpetuating bias

Bias in Model Training and Testing

- Biased datasets

- Limited test conditions (certain demographic groups, languages, environments, etc.)

- Testing with historical biases

- Overfitting to test data

- Lack of rigorous evaluation

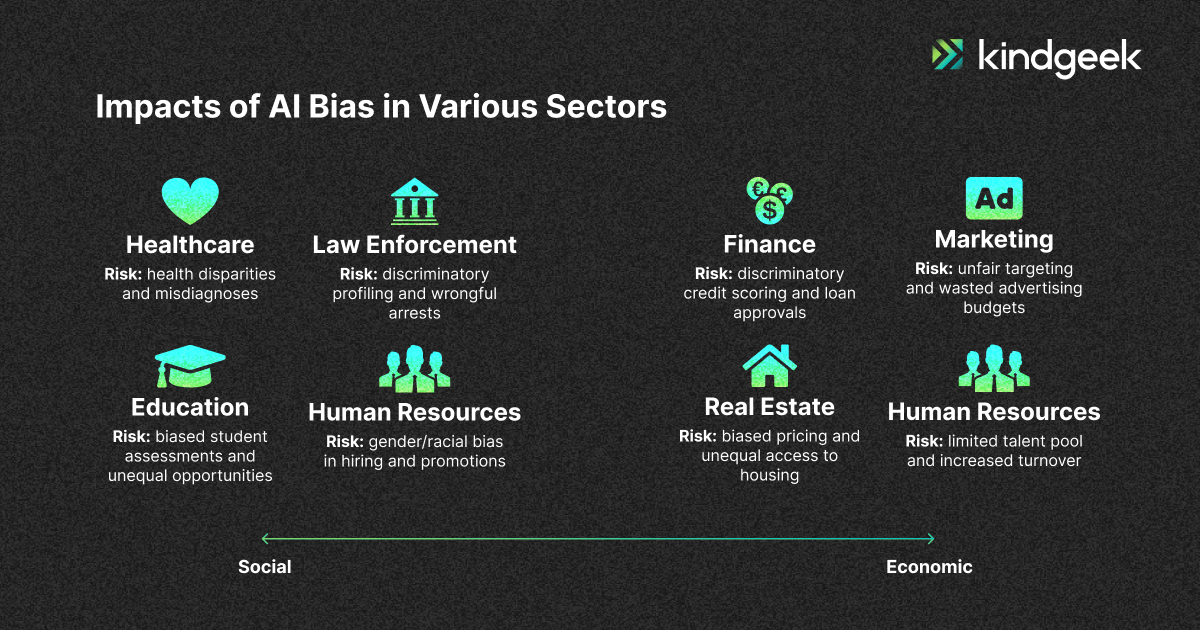

Impacts of AI Bias in Various Sectors

Let’s explore how the types of AI bias we’ve covered may affect various industries:

Social Impacts

Healthcare: AI bias can lead to unequal access to care when algorithms favor certain demographics over others, affecting diagnosis, treatment, and outcomes.

Law Enforcement: Biased AI systems in predictive policing or facial recognition can disproportionately target marginalized communities, leading to systemic discrimination. This can result in discriminatory profiling and false positives, and it can erode public trust.

Education: AI-driven admissions or grading algorithms can disadvantage underrepresented students, further widening existing educational achievement gaps.

Human Resources: AI bias in recruitment processes can lead to unfair treatment of candidates due to their gender, ethnicity, or other protected characteristics, which can negatively impact diversity and inclusion efforts.

Economic Impacts

Finance: Certain populations may be unable to obtain financial services due to bias in AI algorithms. For example, an applicant’s creditworthiness might be misjudged due to biased training data. This can limit access to capital and opportunities for wealth-building.

Marketing: AI biases in consumer profiling raise concerns about unfair targeting, missed opportunities in larger market segments, and wasted advertising budgets.

Real Estate: AI-driven property valuations can be skewed, leading to biased pricing or unequal access to housing.

Human Resources: Besides the social impact, AI bias in hiring can hurt a company’s economic growth by limiting the talent pool and potentially increasing turnover if discrimination is perceived.

While AI can introduce bias in some areas, it also offers significant benefits when applied responsibly. For example, one of the key benefits of using a chatbot for customer service is its ability to provide 24/7 support and handle a high volume of inquiries. However, even in this area, it’s essential to ensure that chatbots are designed without biases.

How to Prevent AI Bias

Understanding what AI bias is and recognizing its root causes and consequences leads to the question: what can be done to prevent it?

Organizations can take several key steps to minimize the impact of bias:

Using Diverse and Representative Data

Collecting data from various sources to reflect different demographics and underrepresented groups can help AI systems make more accurate and fair decisions.

Human review and collaboration remain essential in tasks like data labeling, because automated processes cannot fully replace human judgment on ambiguous cases.
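As a rough illustration of checking representativeness, the sketch below compares group shares in a training sample against reference population shares and then derives per-example weights that compensate for the gap. The group names and shares are invented, and `representation_gaps` and `reweight` are hypothetical helpers. Reweighting is only one option; collecting more data from underrepresented groups is usually preferable.

```python
from collections import Counter

def representation_gaps(sample_groups, population_shares):
    """Return {group: sample share minus population share}."""
    n = len(sample_groups)
    counts = Counter(sample_groups)
    return {group: counts[group] / n - share
            for group, share in population_shares.items()}

def reweight(sample_groups, population_shares):
    """Per-example weights that make weighted group shares match the population."""
    n = len(sample_groups)
    counts = Counter(sample_groups)
    return [population_shares[group] * n / counts[group] for group in sample_groups]

# Hypothetical sample: 80% urban, 20% rural, against a 60/40 reference population
sample_groups = ["urban"] * 8 + ["rural"] * 2
population_shares = {"urban": 0.6, "rural": 0.4}

gaps = representation_gaps(sample_groups, population_shares)
weights = reweight(sample_groups, population_shares)

# Weighted share of rural examples after reweighting
rural_weight = sum(w for w, g in zip(weights, sample_groups) if g == "rural")
rural_share = rural_weight / sum(weights)
print(gaps, rural_share)
```

Here urban examples are overrepresented by roughly 20 percentage points, and the weights bring the rural share back to about 40%. Note that weights cannot help with a group that is missing from the sample entirely.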

Implementing Continuous Monitoring and Bias Audits

Organizations should regularly monitor and test AI models for bias, because biases can emerge or shift over time as data and usage change. Using fairness metrics in bias audits can detect hidden biases and help refine models to be more equitable.
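Two widely used fairness metrics are the demographic parity difference (the gap in positive-prediction rates between groups) and the equal opportunity difference (the gap in true positive rates). The sketch below implements both in plain Python on invented data. It is an illustration of the definitions rather than a full audit, and the function names are chosen for this example.

```python
def demographic_parity_difference(y_pred, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    rates = []
    for group in sorted(set(groups)):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)

def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate between any two groups."""
    rates = []
    for group in sorted(set(groups)):
        # Predictions for members of this group whose true label is positive
        preds = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
        if preds:  # skip groups with no positive examples
            rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)

# Hypothetical audit data: 1 = approved / qualified, 0 = rejected / not qualified
groups = ["a"] * 5 + ["b"] * 5
y_true = [1, 1, 0, 0, 1, 1, 1, 0, 0, 1]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

dp_diff = demographic_parity_difference(y_pred, groups)
eo_diff = equal_opportunity_difference(y_true, y_pred, groups)
print(round(dp_diff, 3), round(eo_diff, 3))  # 0.6 0.667
```

A value of 0 on either metric means the groups are treated identically by that measure. In this toy data, group "a" is approved 80% of the time against 20% for group "b", and qualified members of group "b" are approved far less often than qualified members of group "a". Which metric matters most depends on the application, and the two can conflict.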

Incorporating Human Review

AI should support (not replace) human judgment, especially in high-stakes decisions. Having diverse teams review AI outputs can help catch biases that automated checks might miss.

Implementing override mechanisms allows humans to step in and correct biased decisions. This process establishes an ongoing feedback loop, where the system continuously learns and enhances its performance with each iteration.
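One way an override mechanism might look in code is sketched below. Low-confidence decisions cannot be finalized without a human reviewer, a reviewer's label always wins, and every disagreement is logged so it can feed later retraining or audits. The class names, fields, and the 0.9 threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Decision:
    case_id: str
    model_label: str
    confidence: float
    final_label: Optional[str] = None
    overridden: bool = False

@dataclass
class ReviewPipeline:
    threshold: float = 0.9  # hypothetical cut-off below which review is mandatory
    override_log: List[Decision] = field(default_factory=list)

    def needs_review(self, decision: Decision) -> bool:
        return decision.confidence < self.threshold

    def finalize(self, decision: Decision, reviewer_label: Optional[str] = None) -> Decision:
        if reviewer_label is None:
            if self.needs_review(decision):
                raise ValueError(f"case {decision.case_id} requires human review")
            decision.final_label = decision.model_label
            return decision
        # A human reviewer's label always takes precedence over the model's
        decision.final_label = reviewer_label
        decision.overridden = reviewer_label != decision.model_label
        if decision.overridden:
            self.override_log.append(decision)  # kept for audits and retraining
        return decision

pipeline = ReviewPipeline(threshold=0.9)
auto = pipeline.finalize(Decision("c1", "approve", 0.97))
reviewed = pipeline.finalize(Decision("c2", "reject", 0.62), reviewer_label="approve")
print(auto.final_label, reviewed.final_label, len(pipeline.override_log))
```

The override log is what closes the loop described above: it records exactly where human reviewers disagreed with the model, which is the data needed to find and correct systematic problems.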

Consider Kindgeek Your Trusted Partner

At Kindgeek, we specialize in helping businesses unlock AI’s full potential. Our AI digital transformation service offers a practical approach to AI adoption, considering your unique needs.

If you’re looking to develop a solution to fit your use cases or require a technical audit of an existing AI model, you can rely on our expertise to make it work for your business.

We follow a component-based approach and assemble readily available building blocks to shape your custom business-specific solution. We ensure faster deployment times and cost efficiency while maintaining the highest quality standards.

Conclusion

Will AI ever be unbiased? Well, it’s unlikely, at least in the foreseeable future. AI models learn from human-generated data, and since human society is inherently biased, those biases inevitably influence AI systems.

However, careful data curation, algorithmic adjustments, and ongoing monitoring can drive positive change over time. Techniques like fairness-aware machine learning, bias audits, and human oversight can help mitigate harmful biases and ensure AI systems are as impartial as possible, even if they cannot eliminate bias entirely.