Recently updated on October 31, 2024

For this article, we talked with Michael Kramarenko, our CTO, to examine both the hype and the criticism around ChatGPT using real-world examples and cases. We also look at what makes ChatGPT such a groundbreaking tool and, of course, try to figure out whether to use it or to stay away.

Introduction

Whether we embrace it or not, our world is already shaped by the presence of ChatGPT and similar tools.

But like any new tech, these GPT-like tools offer both benefits and risks that we cannot disregard. Hence the logical question arises: “To be, or not to be?” Or rather, “To ChatGPT, or not to ChatGPT?”

Read on to learn some intriguing facts about ChatGPT and to get a high-level explanation of large language models.

Content

- Debunking common ChatGPT myths

- Decoding hype around ChatGPT

- What makes ChatGPT so revolutionary?

- Navigating ChatGPT Criticism

- So, to ChatGPT or not to ChatGPT?

Debunking common ChatGPT myths

Myth 1: ChatGPT is new

ChatGPT is a chatbot, a type of conversational AI, built on top of a Large Language Model (LLM). What is an LLM in AI? It is a specific type of machine learning model that processes, understands, and generates human language. These models are distinguished by their large number of parameters (typically in the billions) and the vast datasets on which they are trained.

Although ChatGPT boomed in late 2022, the technology behind it (Transformer-based language models with self-attention mechanisms) was invented by the Google Brain team in 2017. Somewhat surprisingly, they were not the ones to capitalize on this innovation.

OpenAI, on the other hand, pursued the idea and invested a great deal of time and effort to get where it is now, along with perhaps tens of millions of dollars just to train the model, not to mention the daily computing expenses of keeping the model running.

Myth 2: ChatGPT understands language the way humans do

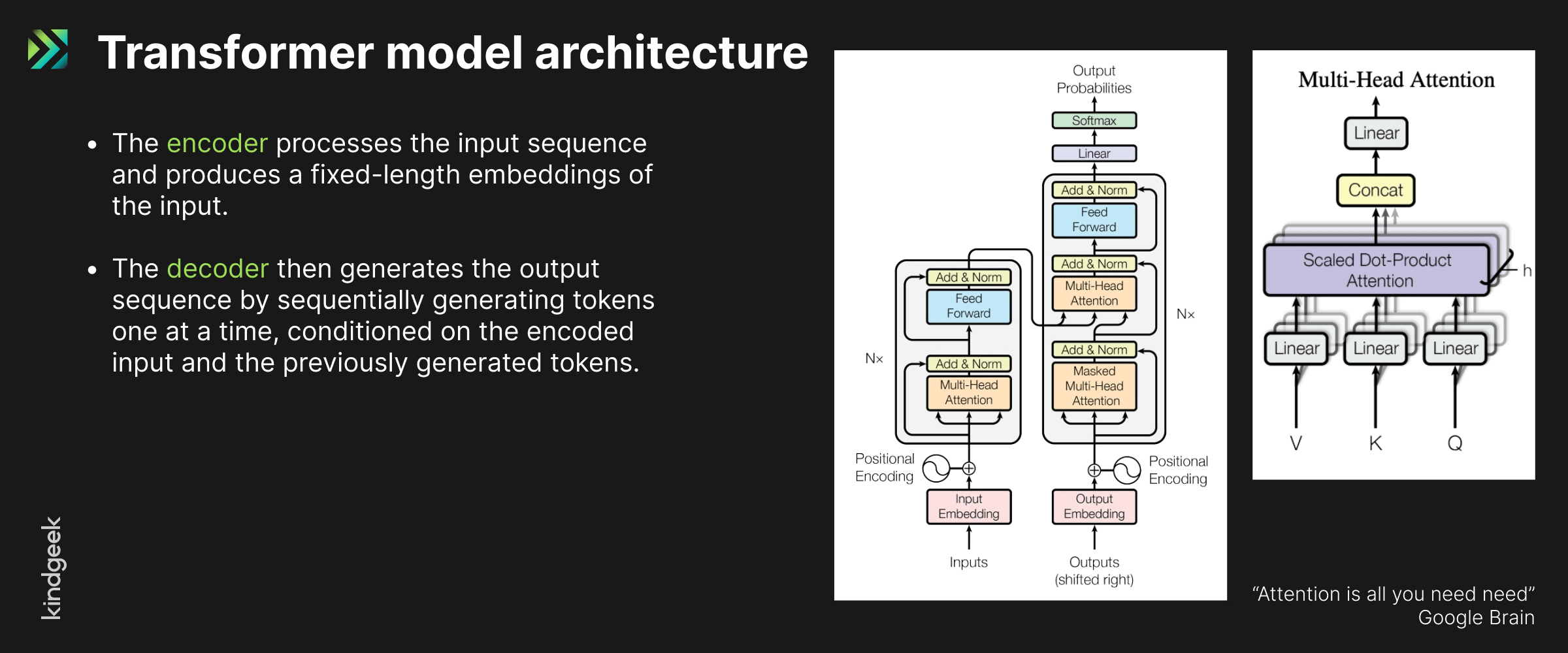

While ChatGPT can generate impressive responses, using words like “understanding,” “feeling,” and “sense” to describe communication with GPT-based tools is incorrect. GPT is about parsing text into tokens, producing fixed-length embeddings, and generating the output sequence conditioned on the encoded input and the previously generated tokens.

ChatGPT’s ability to engage in conversations stems from its advanced capability to predict the most suitable next word or phrase based on the context of the input and the patterns found in its training data.

Thus, its responses are based on probabilities rather than personal feelings or subjective understanding. ChatGPT is just a sophisticated “word guesser,” so to speak.
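The “word guesser” idea can be illustrated with a toy model. The sketch below is not how ChatGPT is implemented: it assumes a tiny made-up corpus and simply counts which word follows which, whereas a real LLM learns far richer patterns with a neural network. The corpus and the function names are hypothetical.

```python
from collections import Counter, defaultdict

# Tiny illustrative corpus (made up); a real LLM trains on vastly more text.
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Turn raw follow-up counts into a probability distribution."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def guess_next(counts, word):
    """Pick the single most probable next word (greedy choice)."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get)

counts = train_bigrams(CORPUS)
print(next_word_probs(counts, "the"))  # "cat" gets the largest share
print(guess_next(counts, "sat"))
```

The model has no idea what a cat is; it only knows that “cat” followed “the” more often than any other word in the text it has seen.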

Myth 3: ChatGPT will replace people

ChatGPT has its limitations and is better suited to serve as a helper that performs routine tasks than as a problem solver.

But ultimately, only human workers are held accountable for the decision-making part and the outcomes it entails, as GPT-based tools are not infallible.

The tool can significantly enhance productivity and efficiency, helping people process information better and faster. One example is an AI-powered assistant for fintech. It provides quick, personalized, human-like service that builds strong customer relationships, propels business growth, and helps maximize revenue potential, while freeing humans to focus on more complex tasks.

Most disruptive technologies change professions and the labor market. Recall the times when “computers wore skirts”: the human computers at NASA Langley, who later retrained as programmers.

To sum up, only time will tell what the future of the labor market will look like.

Decoding hype around ChatGPT

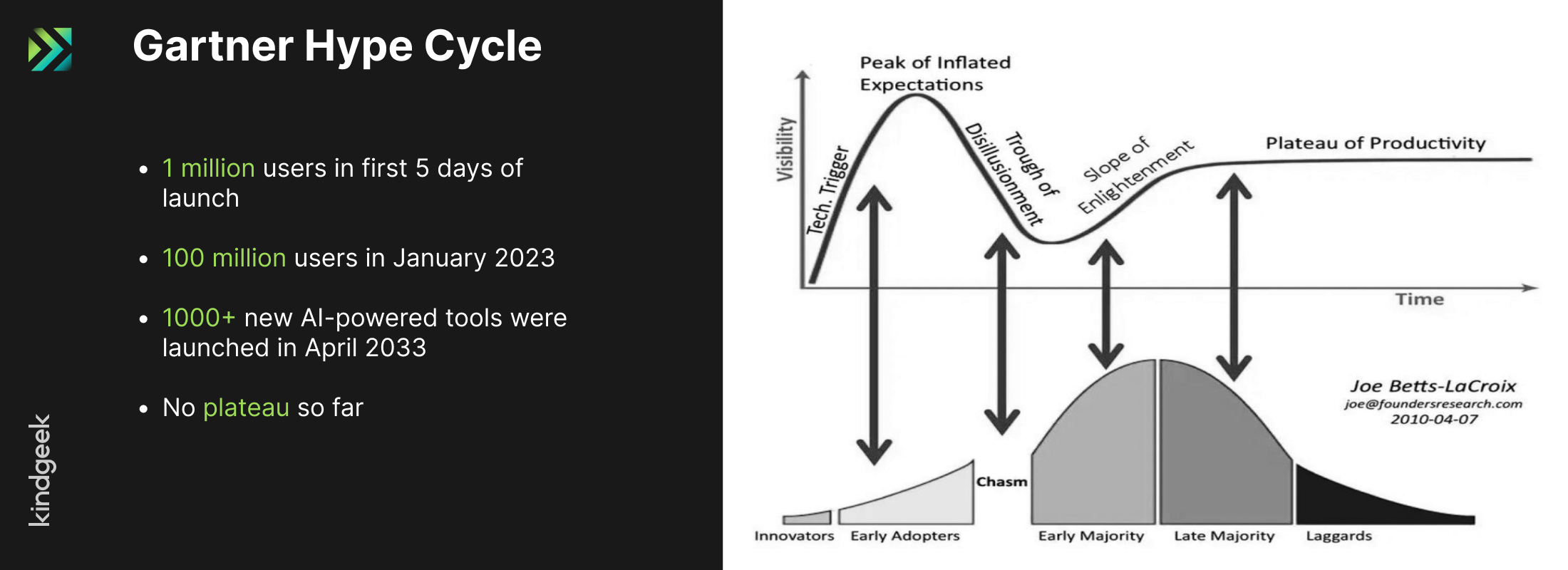

The hype surrounding ChatGPT is characterized by the enthusiasm of millions of people who have eagerly signed up for it. And while social media is abuzz with public awe, each passing day ushers in a plethora of new plugins and extensions.

Therefore, if we place ChatGPT on the Gartner hype cycle today, it is hard not only to predict where its plateau of productivity lies but even to see any sign of the slope of enlightenment so far.

Productivity boosting: a key aspect of public hype

While ChatGPT has garnered attention for its impressive capabilities, its underlying technology is set to transform the professional, legislative, educational, and social aspects of our lives thanks to its ability to enhance human productivity and efficiency across various domains.

Recent studies have found that AI assistance increases customer service worker productivity by 14% on average and enables the least-skilled workers to complete their tasks 35% faster. Remarkably, agents with 2 months of experience who were aided by AI performed just as well as or better than those with 6 months of experience working without it.

Thus, the productivity boost is both the main benefit of ChatGPT and the main driver of the public hype.

But as AI is constantly evolving, its impact on various aspects of our lives is yet to be fully understood.

What makes ChatGPT so revolutionary?

In traditional programming, we develop software and can read the final code. With LLMs, the valuable artifact is the trained neural network itself: billions of learned parameters that result from extensive training rather than from hand-written logic.

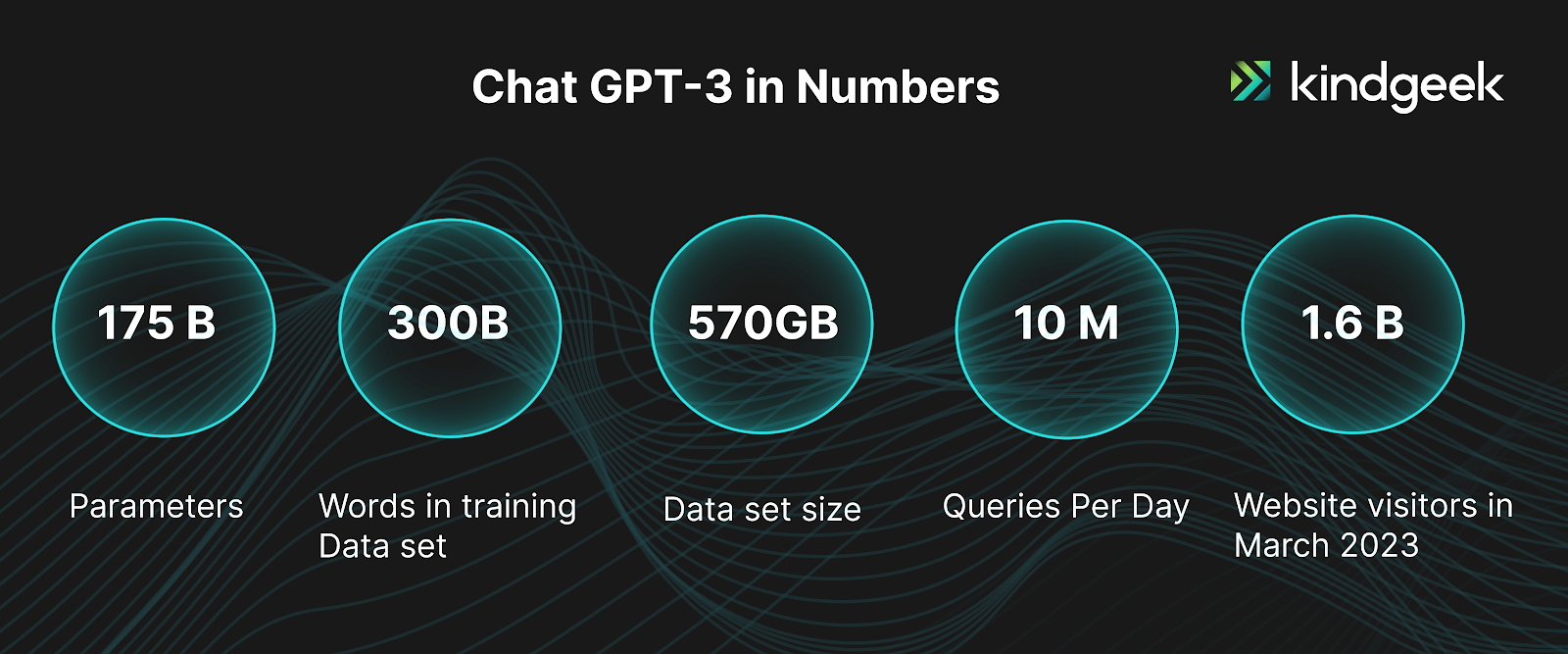

And that is the case with ChatGPT: it is built on an extensively trained, enormous neural network (175 billion parameters in the case of GPT-3) that has developed the ability to produce sophisticated, human-like outputs.

Here are the key aspects behind the hype.

Transformer-based LLM

Is ChatGPT a large language model? Yes, and it operates on the principles of GPT (Generative Pre-trained Transformer).

- GPT models are neural network-based language prediction models built on the Transformer architecture, which allows for state-of-the-art performance in NLP tasks.

- They belong to the family of language models (LMs) and large language models (LLMs) and serve as the foundation for various generative platforms and tools.

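The original Transformer architecture consists of two types of components.

The first, known as the encoder, transforms the input tokens into multidimensional vectors. Essentially, it generates a representation of the input.

The second, the decoder, takes such vectors and generates the output token by token, selecting the most probable continuation with the help of the self-attention mechanism. GPT models use only the decoder part: the prompt and the tokens generated so far are processed by the same stack of layers. The 175 billion figure quoted for GPT-3 refers to the total number of learned weights in the network, not to the size of these vectors.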

Self-attention mechanism

The self-attention mechanism is a crucial component of the Transformer architecture. It assigns a weight to every token in the input relative to every other token, which lets the model capture and retain the input context and form dynamic, context-aware responses.
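To make the weighting idea concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It rests on several simplifying assumptions: the three 2-dimensional token vectors are invented, the learned query, key, and value projections of a real Transformer are replaced by the identity, and the causal mask that GPT-style models apply (so a token cannot look at later tokens) is left out.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Real Transformers first multiply each vector by learned query, key,
    and value matrices; here queries = keys = values = the input.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token in the sequence.
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # New representation = weighted mix of all token vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a three-token input.
tokens = ["bank", "river", "money"]
embeddings = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one token “looks at” every token in the input, itself included. Tokens whose vectors point in similar directions receive larger weights, which is how context ends up blended into each token’s representation.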

Reinforcement learning

Reinforcement learning techniques are employed to fine-tune the model’s behavior. The process involves providing rewards or penalties to the model based on its generated responses. Through this feedback, the model can learn to generate more appropriate responses over time.
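The reward-and-penalty loop can be sketched with a toy example. This is only an illustration under strong assumptions: three canned replies stand in for the model’s outputs, hand-assigned scores stand in for human feedback, and a plain policy-gradient update stands in for the far more elaborate procedure used on real models. All names and numbers are hypothetical.

```python
import math

# Hypothetical candidate replies and hand-assigned rewards standing in for
# human feedback (+1 = preferred, -1 = rejected).
CANDIDATES = ["helpful answer", "off-topic answer", "rude answer"]
REWARDS = [1.0, -1.0, -1.0]

def softmax(logits):
    """Turn preference scores into a probability of picking each reply."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def policy_gradient_step(logits, rewards, learning_rate=0.5):
    """One exact gradient-ascent step on the expected reward.

    For a softmax policy, d(expected reward) / d(logit_i)
    equals p_i * (reward_i - expected reward).
    """
    probs = softmax(logits)
    expected = sum(p * r for p, r in zip(probs, rewards))
    return [
        logit + learning_rate * p * (r - expected)
        for logit, p, r in zip(logits, probs, rewards)
    ]

logits = [0.0, 0.0, 0.0]  # start with no preference between replies
for _ in range(50):
    logits = policy_gradient_step(logits, REWARDS)

for reply, p in zip(CANDIDATES, softmax(logits)):
    print(f"{reply}: {p:.2f}")
```

After repeated feedback, almost all of the probability mass shifts to the rewarded reply, which is the sense in which the model “learns to generate more appropriate responses over time.”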

Number of parameters and dataset size

While data regarding GPT-4 remains undisclosed, the available information on GPT-3 is mind-blowing. The model has 175 billion parameters and was trained on an extensive dataset containing hundreds of billions of tokens (word fragments). All of this has contributed to the model’s outstanding performance.

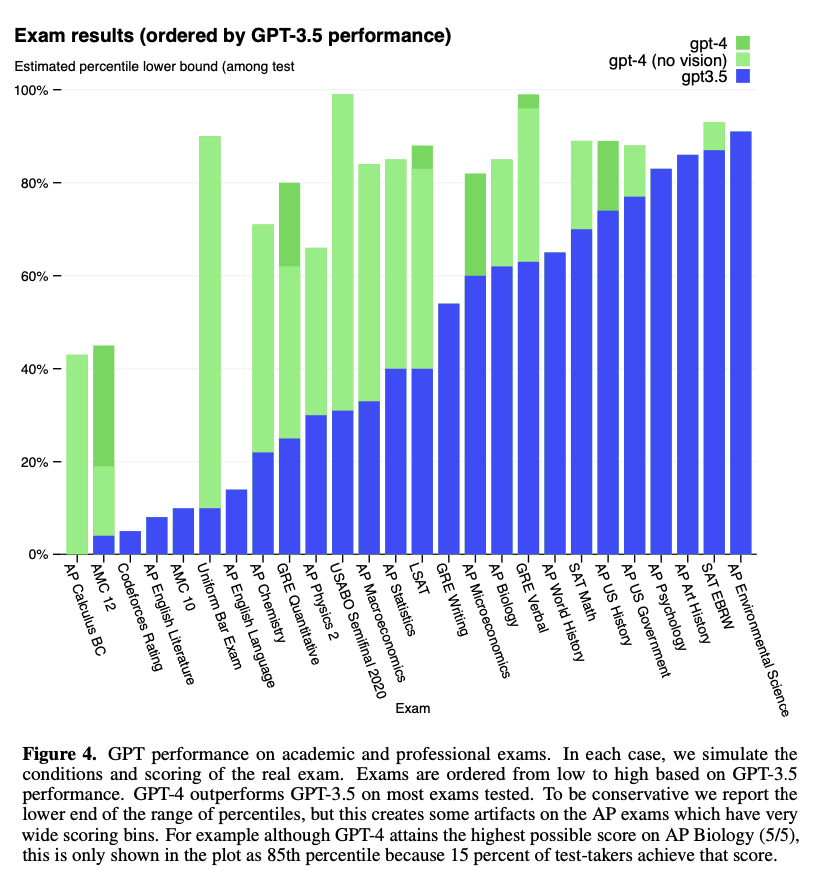

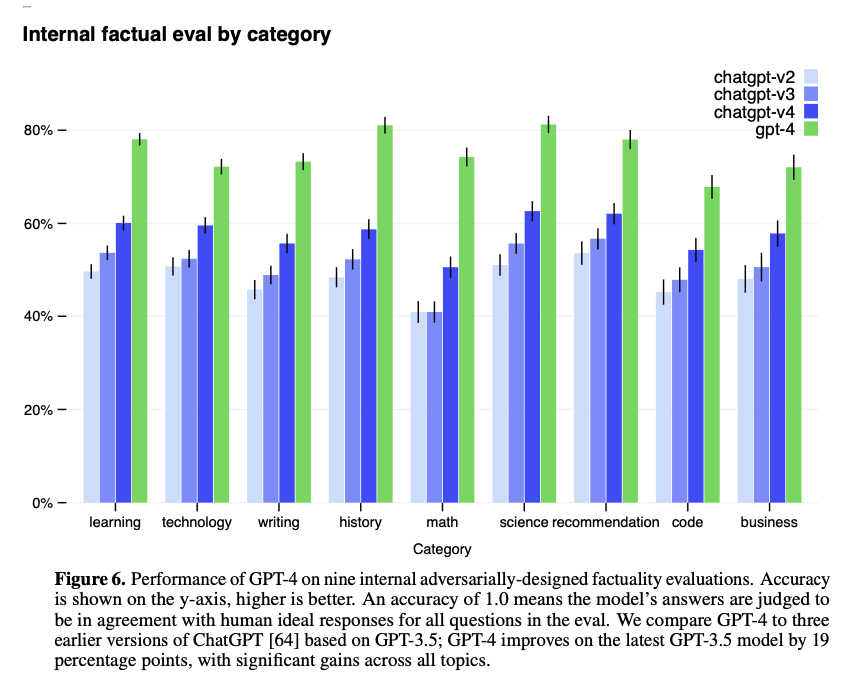

The new GPT-4 has been trained to achieve even better performance than previous models.

In fact, it scored much higher on exams than GPT-3.5, the latest model in the GPT-3 family. So its parameter count, the amount of training data, and other numbers will probably amaze us when they are finally revealed.

[source: OpenAI]

Frankly, it seems like those exam scores are the primary benchmark for assessing the quality of generated content.

Fine-tuning

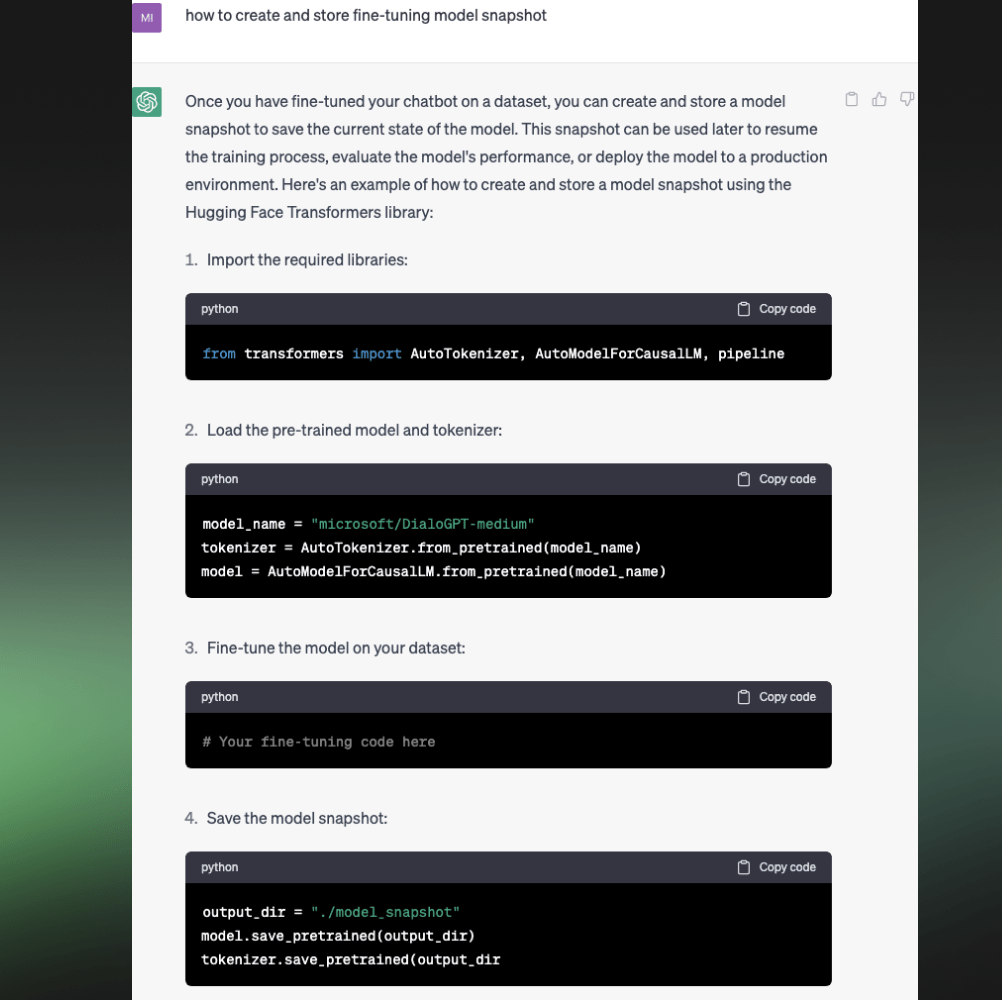

At the time of writing, OpenAI allows fine-tuning of its models up to the GPT-3.5 version.

This process helps optimize the AI model for your specific use case or domain for better performance, as compared to using the generic, pre-trained GPT-3 model out of the box.
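Fine-tuning starts with preparing examples of the behavior you want. The sketch below assumes the chat-style JSONL layout described in OpenAI’s fine-tuning guide for GPT-3.5-class models (one JSON object per line, each with a `messages` list of `system`, `user`, and `assistant` entries). The Q&A pairs, the system prompt, and the `to_jsonl` helper are hypothetical, and the current documentation should be checked before uploading anything.

```python
import json

# Hypothetical support Q&A pairs; a real training set needs many more examples.
EXAMPLES = [
    ("How do I reset my password?", "Open Settings, choose Security, then Reset password."),
    ("Where can I download my invoice?", "Invoices are listed under Billing > History."),
]

# Made-up product name, used for illustration only.
SYSTEM_PROMPT = "You are a concise support assistant for ExampleBank."

def to_jsonl(pairs, system_prompt):
    """Serialize Q&A pairs as one JSON object per line (JSONL)."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)

training_file_contents = to_jsonl(EXAMPLES, SYSTEM_PROMPT)
print(training_file_contents)
```

The quality and consistency of these examples matter more than the code around them, since the model will imitate whatever the file contains.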

API & Plugins

The opening of the API has enabled widespread accessibility and integration.

Besides, it gave rise to a plethora of tools, extensions, and plugins, ranging from YouTube to IntelliJ IDEs.

In the near future, though, GPT-based tools are expected to go a step further and become a “building block” for many products and software solutions worldwide.

Navigating ChatGPT Criticism

Alongside the hype, a wave of criticism has emerged.

Brief criticism overview

The global community calls for ethical considerations, transparency, and accountability in the development, deployment, and use of AI technologies like ChatGPT.

Common concerns include security, data privacy, factual inaccuracy, copyright violation, misuse, job redundancy, and dangers to humanity.

Here’s an outline of what has happened so far:

- The US has developed the AI Risk Management Framework aimed at enhancing the management of AI risks for individuals, organizations, and society at large.

- Many professionals around the globe have signed open letters expressing concerns and advocating for a pause or halt in the advancement of AI.

- The open letter “Pause Giant AI Experiments,” which warns of profound risks to society and humanity, was signed by 27K professionals, including tech billionaire Elon Musk.

- The open letter “Working together on our future with AI” was signed by prominent AI professionals.

- Against the backdrop of events, Geoffrey Hinton, the “godfather of AI,” leaves Google and warns of AI dangers ahead.

- White House summons a meeting with executives from Google, Microsoft, OpenAI, and Anthropic to address the risks of AI amid ethical criticism.

- OpenAI CEO Sam Altman calls on US lawmakers to regulate artificial intelligence as the technology becomes “increasingly powerful”.

- The European Union has moved closer to passing legislation regulating AI technology, potentially the world’s first comprehensive AI law.

- The leaders of the G7 nations call for the development of global technical AI standards, highlighting the need for responsible use of generative AI.

- Canadian privacy regulators launch an investigation into OpenAI in response to a complaint alleging “the collection, use, and disclosure of personal information without consent.”

Besides, many creative professionals accuse AI companies of using the works of artists to train models to imitate their work.

Amongst other criticism, there are also exaggerated claims that AI will inevitably replace all professions, leaving nothing but AI.

Key ChatGPT problems with examples

It is crucial to approach these tools with caution due to several critical aspects:

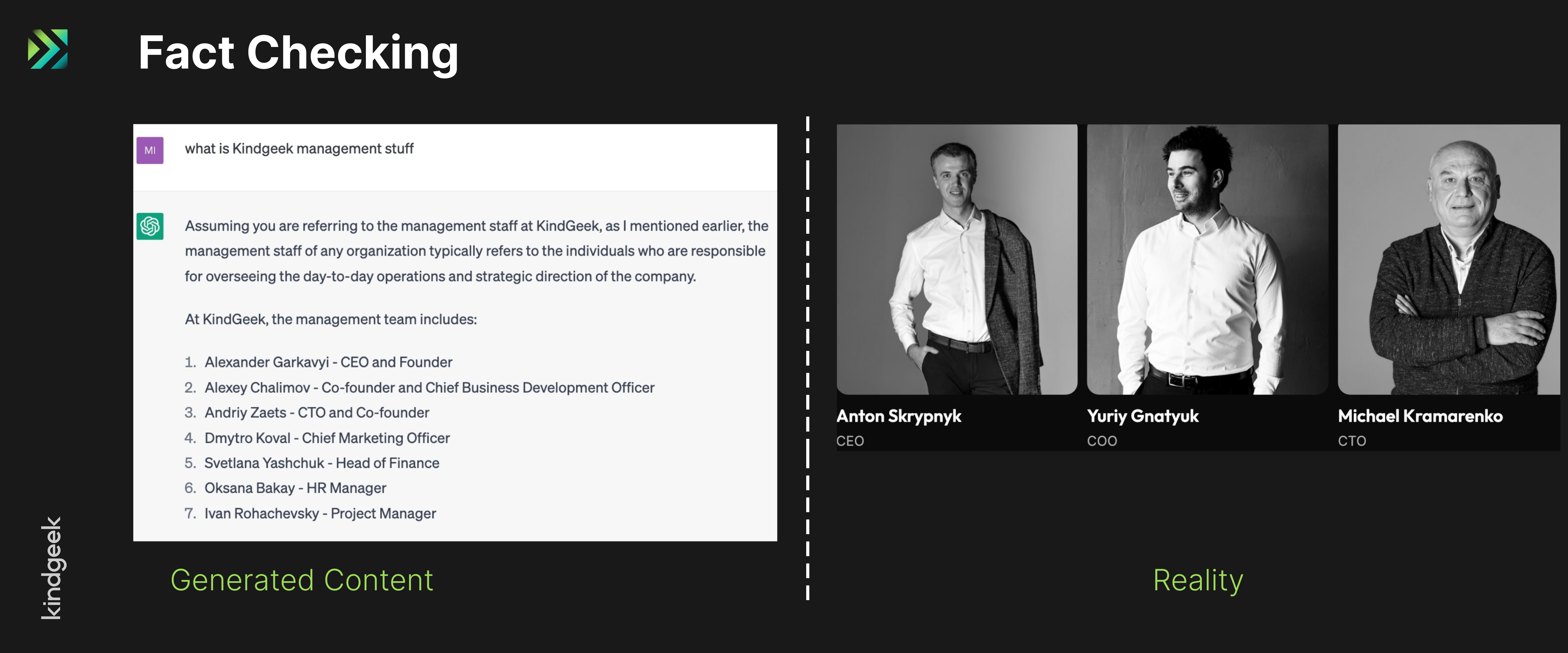

Factualness problem

ChatGPT is not 100% reliable in generating accurate facts and data consistently. There have been numerous instances where ChatGPT has produced incorrect or misleading information.

Thus, unless you know the topic well, you need to fact-check the information provided by generative models before relying on it.

Basic example – when asked about the C-level management of our company, here’s what ChatGPT generated:

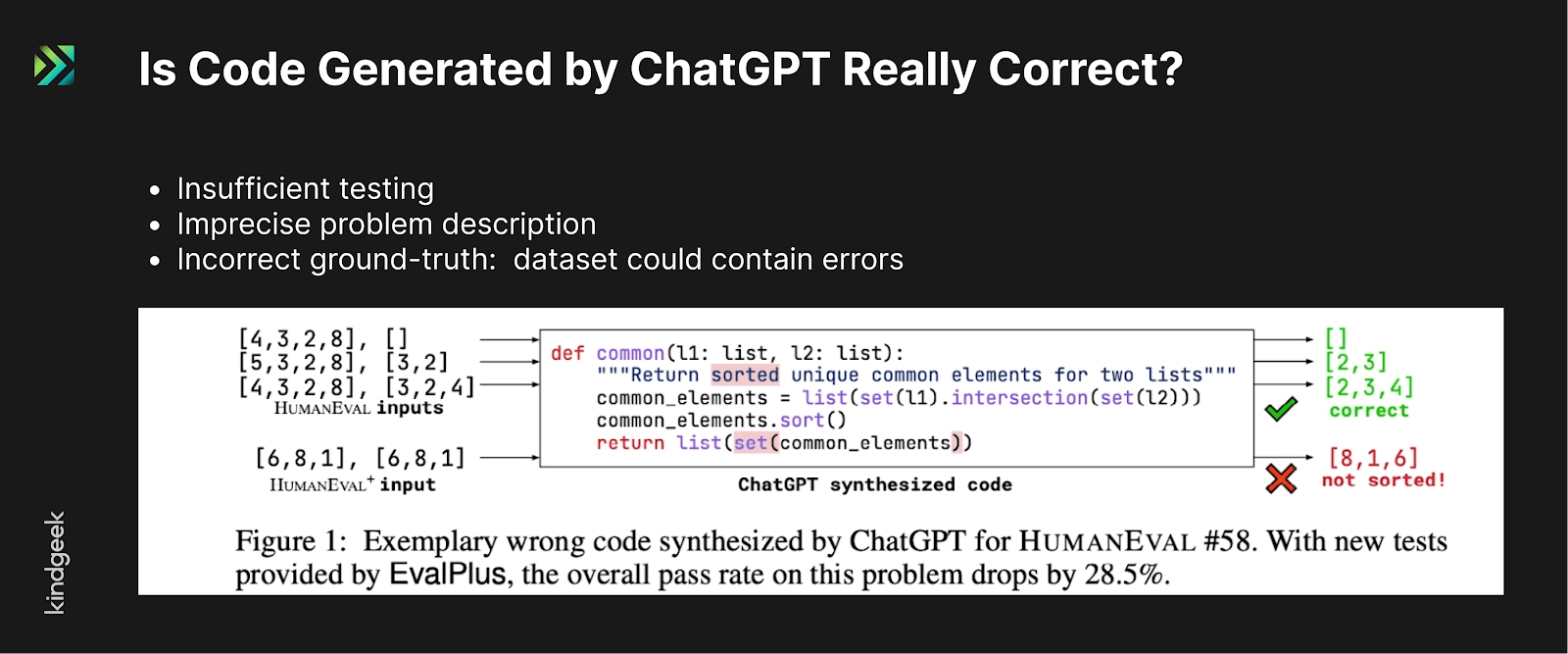

Code quality

Now here are examples relevant to the IT industry.

When it comes to code generation, ChatGPT can produce code, but what exactly it will produce, and of what quality, remains questionable.

For example, when asked how to set up a fine-tuned OpenAI model, ChatGPT responded with irrelevant code.

There have also been studies on how well it can write test code, and the results show that about 30% of that code is low-quality.

There’s no better way to conclude this section than with this amusing viral tweet:

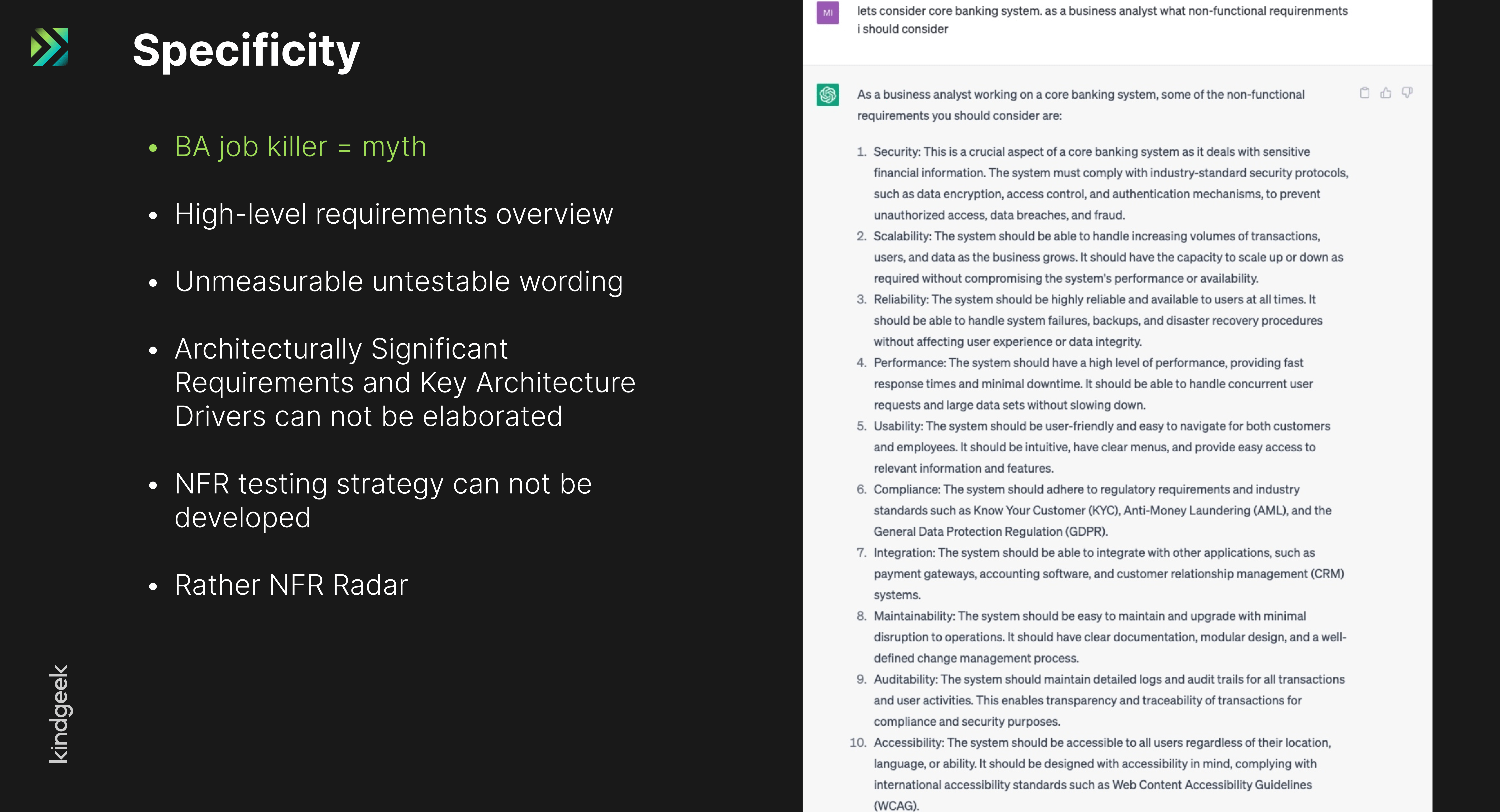

Specificity problem

ChatGPT’s responses lack true insight. When asked to provide detailed information or specifics, the tool tends to give overly generic responses that do not carry the necessary depth.

For example, when we prompted ChatGPT to write non-functional requirements for a business analyst to consider within a given case, it responded with just a generic overview of NFRs.

While it can help avoid overlooking certain aspects, it won’t take care of the essential details and nuances required. In other words, it won’t do the job for you.

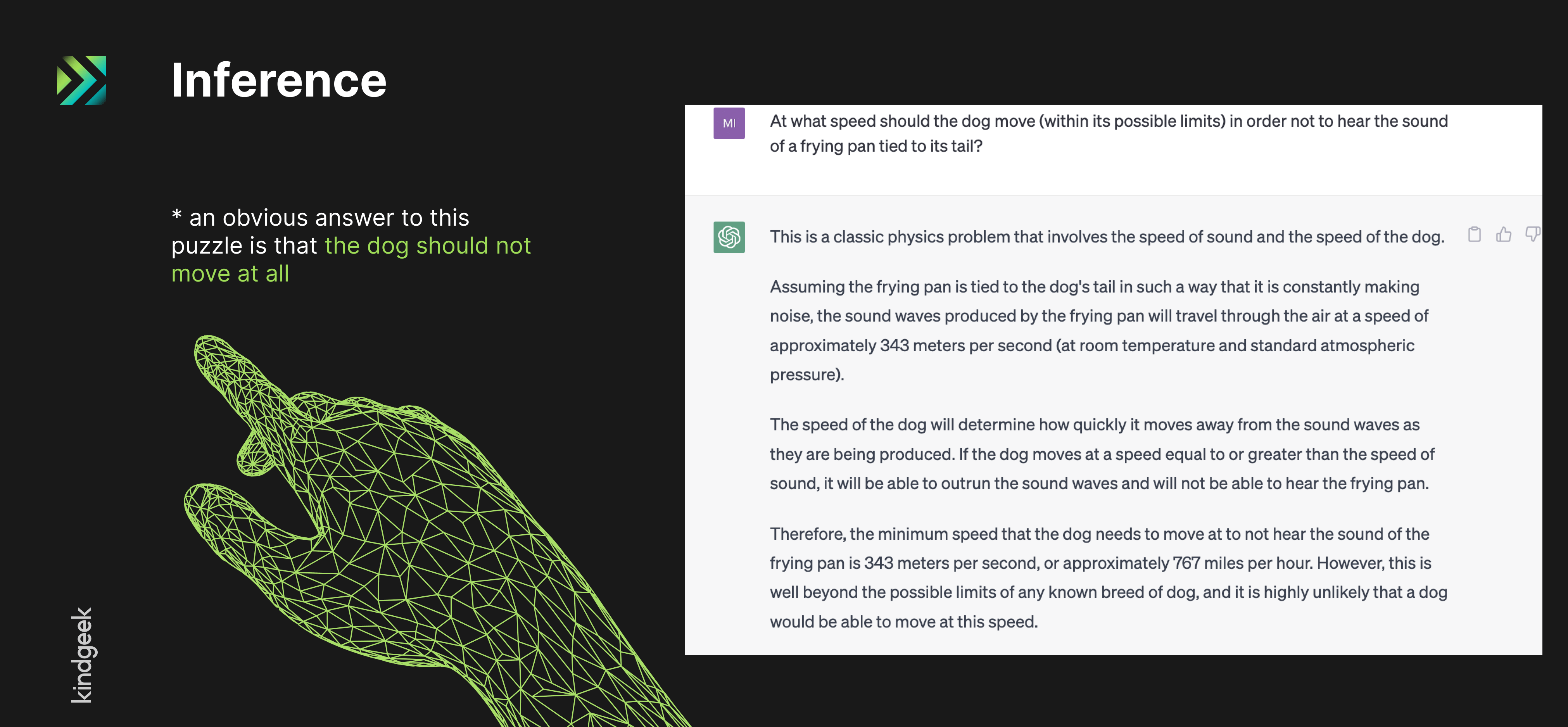

Inference Aspect (reasoning)

ChatGPT does not have the ability to think or form rational judgments. It is simply not designed to perform logical tasks the way, for example, IBM’s Deep Blue was.

The process of generating a response is fundamentally probabilistic, so what the model will generate is, to a degree, uncertain.

Here’s an amusing example to share: a classic logical-reasoning puzzle often presented to kindergarteners.
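What “probabilistic” means in practice can be shown with a small sampling sketch. It assumes a model has already assigned scores to three possible next words (the words and numbers below are invented) and uses the common temperature technique to turn those scores into probabilities before drawing one at random. It is an illustration of the principle, not a description of ChatGPT’s internals.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Lower temperature sharpens the distribution; higher flattens it."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random according to its probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical scores for continuations of "The weather today is ...".
tokens = ["sunny", "rainy", "purple"]
logits = [2.0, 1.5, 0.2]

rng = random.Random(0)  # fixed seed only so the demo is repeatable
for temperature in (0.2, 1.0, 2.0):
    draws = [sample_token(tokens, logits, temperature, rng) for _ in range(10)]
    print(temperature, draws)
```

At a low temperature the most likely word dominates the draws, while at a high temperature even the implausible option shows up now and then. The same prompt can therefore lead to different answers on different runs.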

When presented with this puzzle, ChatGPT’s response was both interesting and hilarious. It suggested that the dog must possess an abnormal speed, something that doesn’t even exist in nature.

Yet it didn’t suggest that maybe the dog should simply stand still and not move in such a case.

Clearly, the model failed to reach the simple and straightforward logical conclusion that the puzzle intended and started to overcomplicate things.

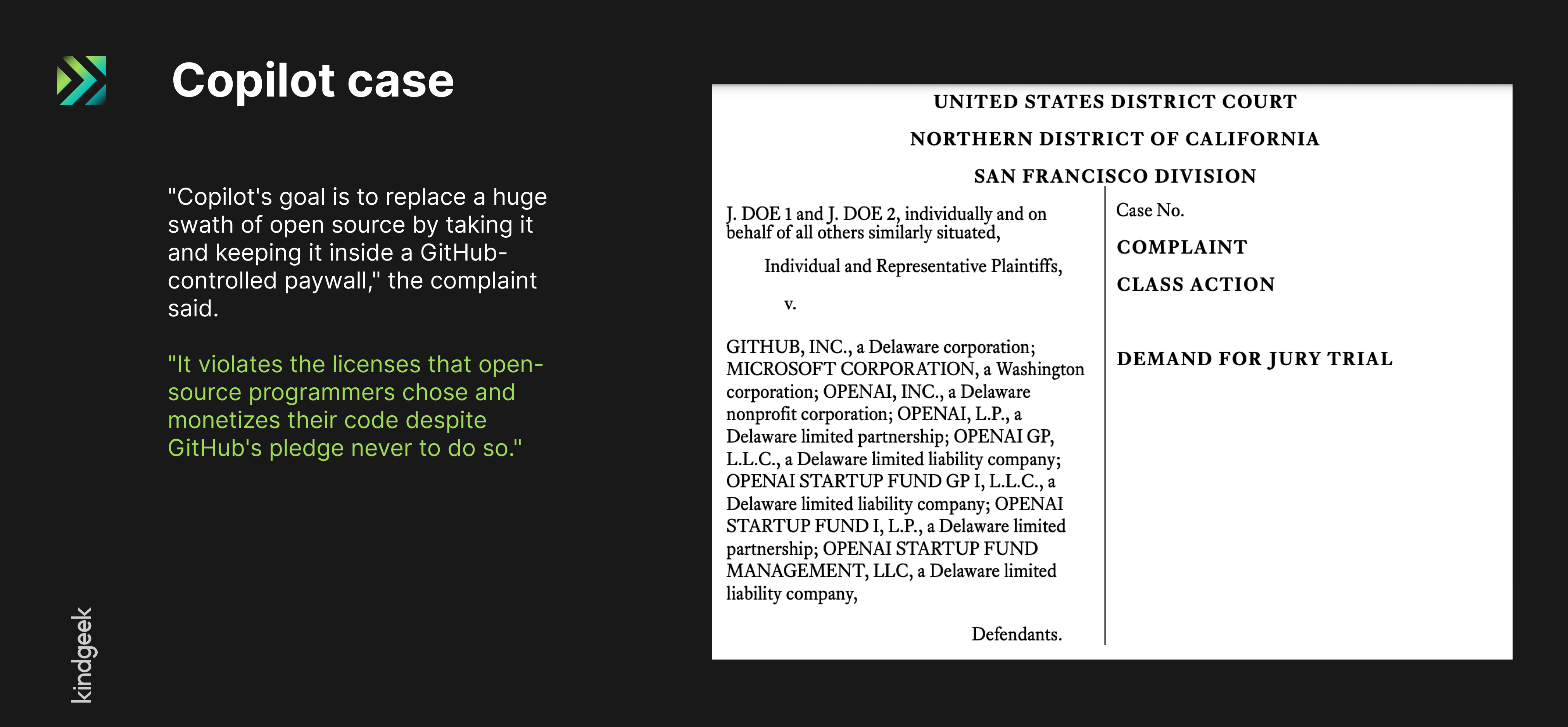

Ethical issues

AI systems like ChatGPT also raise ethical issues. A striking example is the GitHub Copilot case.

Microsoft, GitHub, and OpenAI have faced a lawsuit from authors of open-source projects for allegedly violating intellectual property rights. In essence, the claim is that code published under open-source licenses was used for commercial purposes.

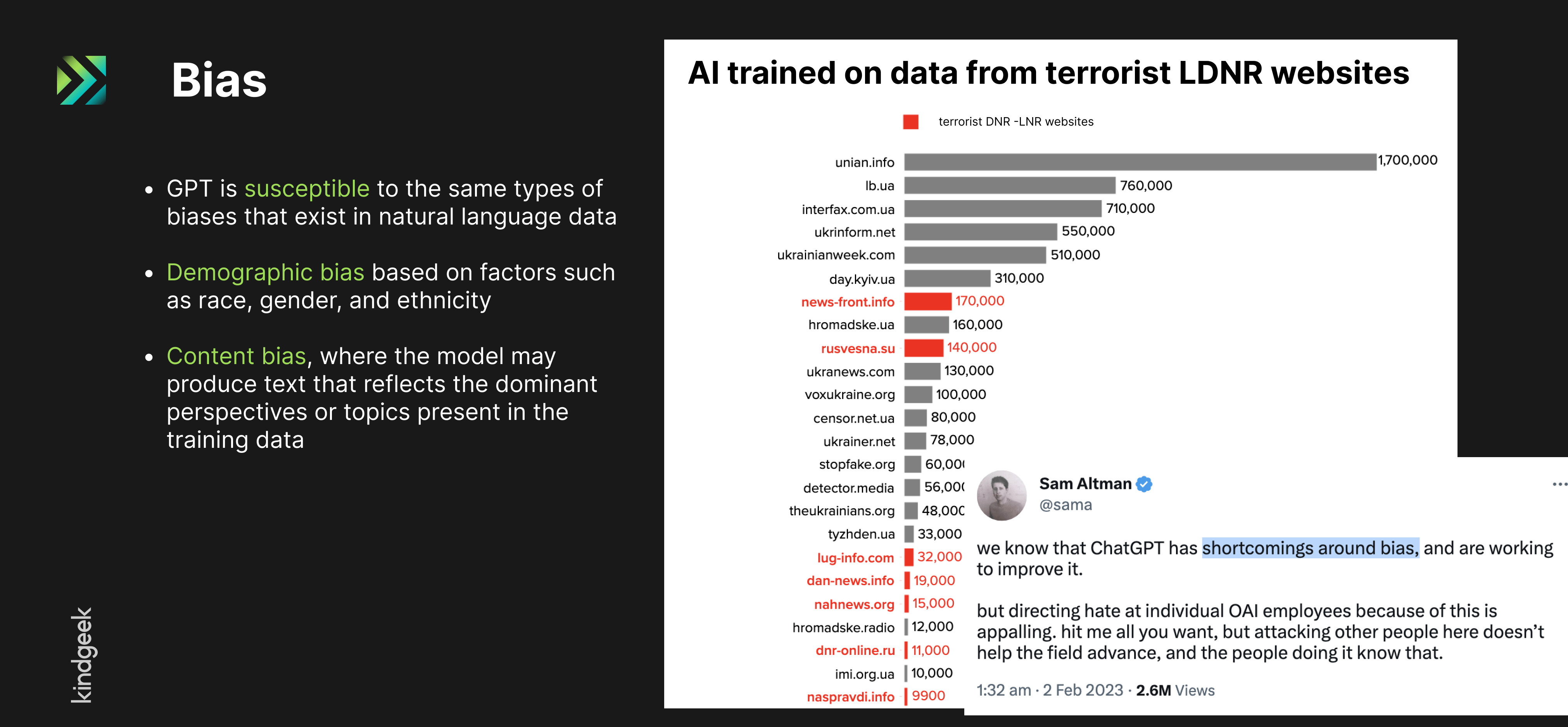

Bias problem

Bias is indeed another issue, as ChatGPT learns from diverse sources, which can introduce conflicting or biased information.

Research by The Washington Post showed instances where ChatGPT-like models have been trained on content from several media outlets that rank low for trustworthiness. This can ultimately lead the AI to produce misinformation, propaganda, and bias.

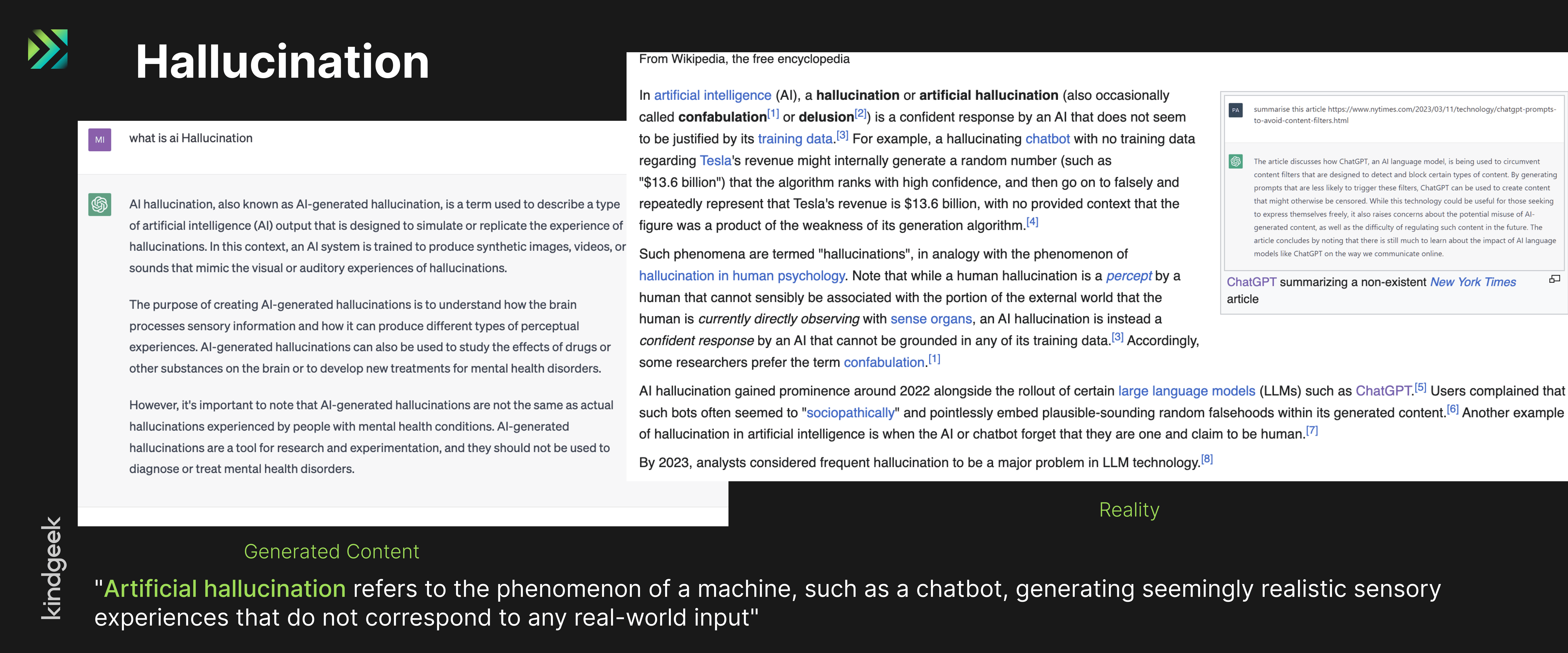

Hallucination problem

AI hallucinations are a unique problem associated with generative models like GPT.

In contrast to the factualness problem, where real facts get distorted, this phenomenon refers to the generation of content that does not exist in reality at all.

While a lot of effort is currently going into mitigating this problem, for now it remains a significant issue.

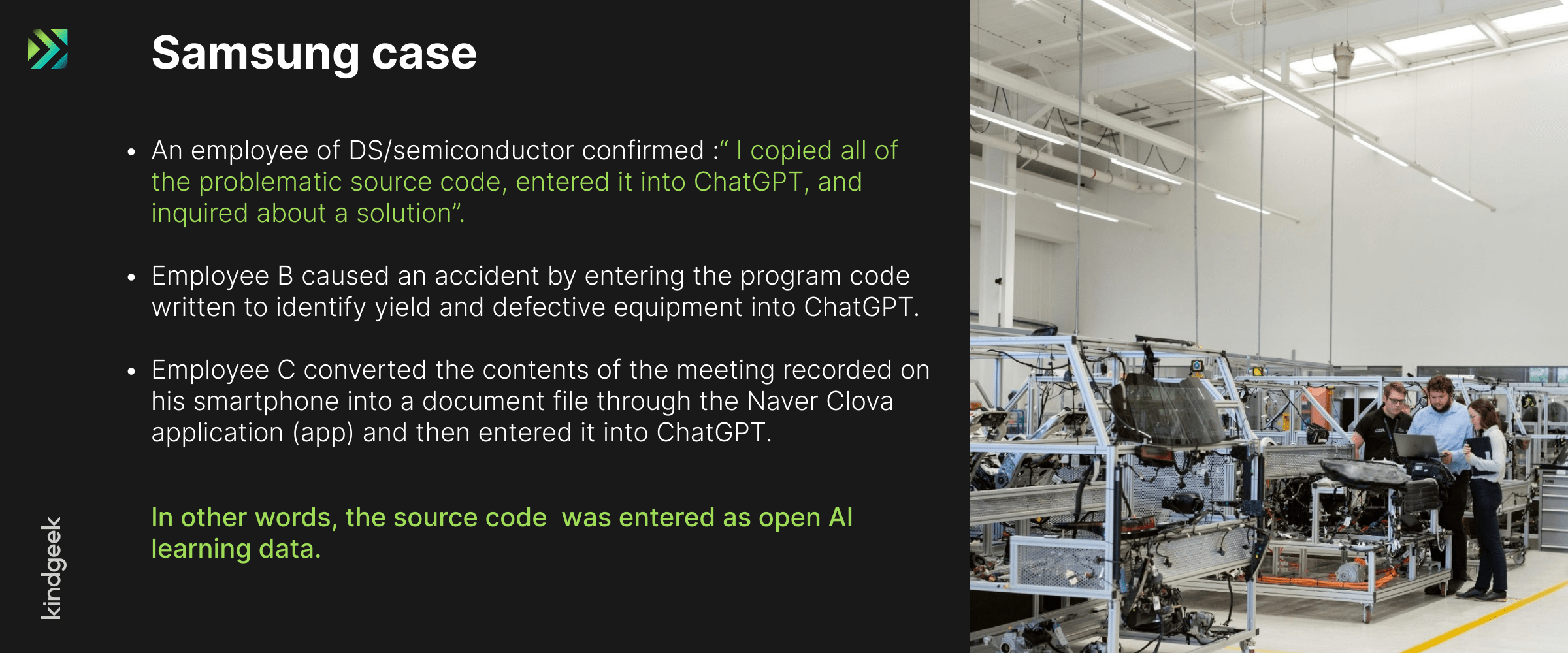

Security problem

Security is another critical aspect that requires careful attention when it comes to GPT-like models.

Here are some interesting cases that underscore the security risks associated with ChatGPT:

Samsung code leakage

Samsung reported an accidental leak of sensitive internal source code caused by its developers’ careless use of ChatGPT. As of now, there have been at least two instances of this kind.

AWS Credential leaks

Many internet users have seen screenshots of ChatGPT exposing AWS credentials. When asked directly, it usually responds within its moderation guidelines, saying it does not share security data. However, there have been cases where a more cunning approach led it to expose genuine AWS account credentials.

Redis Bug story

The next well-known story revolves around a Redis bug that caused several ChatGPT users to inadvertently see other users’ requests and personal information. OpenAI promptly addressed the issue and fixed the bug. However, given the nature of software development, it is difficult to guarantee that this will be the last bug or even the second-to-last one.

Notably, against this backdrop, Italy temporarily banned ChatGPT within its territory. In late March 2023, the Italian data protection authority opened an investigation into a suspected breach of Europe’s stringent privacy regulation.

After ChatGPT was reactivated in late April 2023, Italy’s watchdog said it planned to ramp up scrutiny and review other AI systems as well.

So, to ChatGPT or not to ChatGPT?

ChatGPT is an exceptional AI tool that has expanded the boundaries of what was once possible and brought numerous benefits with it. But as with most new technologies, apart from benefits, it also presents risks.

However, stopping the march of technological progress is impossible; instead, we must learn to adapt and navigate these changes effectively yet carefully and shrewdly.

Here are the main points to sum up:

- Large Language Models are not infallible and can make mistakes. Their training data, which is their lifeblood, may contain a lot of inconsistent material.

- They do not have “core beliefs” or inherent convictions. They are just sophisticated “word guessers” generating responses based on patterns and associations found in the data they were trained on.

- Thus, LLMs do not have any sense of truth, of what is right or wrong.

- LLMs respond with too generic information, can fail at correct reasoning, and tend to hallucinate at times.

- LLMs don’t do problem-solving on their own, but they can aid you in these tasks.

- LLMs, “like most new technologies, present new benefits as well as new risks.”

Our recommendations for C-level management on ChatGPT

- Develop a comprehensive usage policy for the responsible, ethical, and secure use of ChatGPT and other generative tools across the organization.

As for the IT industry though, it’s better to be cautious about both hype and widespread adoption of generative tools, given the evident problems with security, privacy, code leakages, and generated code quality overall.

- Keep an eye on AI developments:

  - upcoming ChatGPT features and security risks

  - the state of the EU Artificial Intelligence Act

  - the state of the US AI Risk Management Framework

- Update ChatGPT policy accordingly.

Our advice for staff professionals

We’d like to encourage all specialists not only to learn how to use GPT but also to cultivate the ability to develop innovative breakthrough technologies and products that address real-world problems effectively.

- Embrace a T-shaped mindset;

- Focus on being problem solvers, not task performers (mind you, ChatGPT already excels at performing tasks);

- Cultivate critical thinking to tackle complex problems effectively;

- Be bold to step out of your comfort zone and take on new challenges;

- Learn to be flexible to changes and nurture creativity;

- And evergreen advice – always ask, “What problem are we solving?” before planning actions. Always helps.

While it’s impossible to stop technological advances, a smart move is to remain vigilant, acknowledge the potential advantages and risks, and make informed decisions on how to best adapt to and leverage these technological advancements.